Alon Gubkin

Aporia | Co-Founder & CTO

Machine learning model monitoring measures how well your machine learning model performs a task during training and in real-time deployment. As ML engineers, we define performance metrics such as accuracy, F1 score, and recall, which compare the predictions of a machine learning model with the known values of the dependent variable in a dataset.

When models are deployed to production, there is often a discrepancy between the original training data and dynamic data in the production environment. This causes the performance of a production model to degrade over time.

For this reason, continuous tracking and monitoring of these performance metrics are critical for improving model performance. ML model monitoring can help by:

These insights allow data scientists and ML teams to identify the root cause of problems, and make better decisions on how to evolve and update models to improve accuracy in production.

In life, as well as in business, feedback loops are essential. The concept of feedback loops is simple: You produce something, measure how it performs, and then improve it. This is a constant process of model monitoring and improving. Machine learning models can certainly benefit from feedback loops if they contain measurable information and room for improvement. ML monitoring tools can provide that much-needed feedback.

Suppose you trained your model to detect credit card fraud based on pre-COVID user data. During the pandemic, credit card use and buying habits changed. Such changes can expose your model to data from a distribution it was not trained on. This is an example of data drift, one of several sources of model degradation. Without ML monitoring, your model will output incorrect predictions with no warning signs, which will negatively impact your customers and your organization in the long run.

AI hallucinations can severely degrade the performance of models, particularly in high-stakes environments such as healthcare, where a single incorrect output could lead to a misdiagnosis with serious health implications. It’s essential to monitor and mitigate these hallucinations in LLMs to ensure the accuracy and safety of AI applications, maintaining user trust and reliability in decision-making processes.

Model building is usually an iterative process, so a metrics stack for model monitoring is crucial for continuous improvement: feedback from the deployed ML model can be funneled back into the model building stage. It's essential to know how well your machine learning models perform over time. To do this, you'll need ML model monitoring tools that track performance across everything from concept drift to how well your algorithm performs on new data.

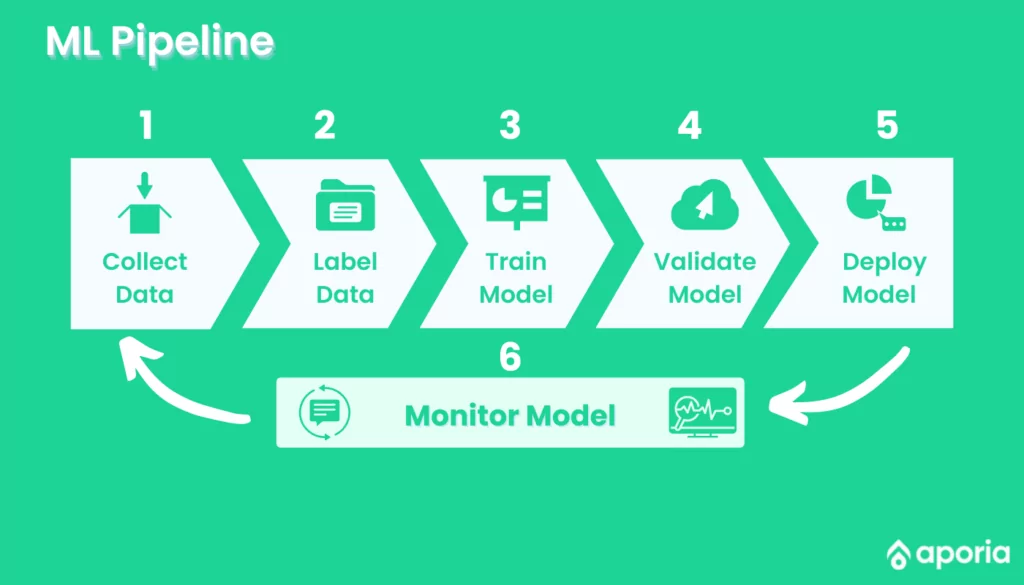

Several steps are involved in a typical ML workflow, including data ingestion, preprocessing, model building, evaluation, and deployment. Feedback, however, is missing from this workflow.

A primary goal of ML monitoring is to provide this feedback loop, feeding data from the production environment back into the model building phase. This allows machine learning models to improve continuously, whether that means updating the model or continuing to use the existing one.
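As a rough illustration of that loop, the sketch below joins logged production predictions with ground-truth labels that arrive later, producing rows that can go back into evaluation or retraining. It assumes each prediction is logged with a request ID and that labels are keyed by the same ID; the `PredictionLog` record and `build_feedback_dataset` helper are hypothetical names, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class PredictionLog:
    """One logged production prediction (hypothetical schema)."""
    request_id: str
    features: dict
    prediction: int


def build_feedback_dataset(logs, labels):
    """Join logged predictions with ground-truth labels that arrived later.

    Returns (features, label, was_correct) rows for every prediction whose
    label is known, ready to be fed back into evaluation or retraining.
    """
    rows = []
    for log in logs:
        if log.request_id not in labels:
            continue  # label not available yet; pick it up on a later run
        label = labels[log.request_id]
        rows.append((log.features, label, log.prediction == label))
    return rows


logs = [
    PredictionLog("a1", {"amount": 120.0}, 0),
    PredictionLog("a2", {"amount": 9800.0}, 1),
    PredictionLog("a3", {"amount": 45.0}, 0),
]
labels = {"a1": 0, "a2": 0}  # "a3" has no label yet

feedback = build_feedback_dataset(logs, labels)
accuracy = sum(correct for _, _, correct in feedback) / len(feedback)
print(len(feedback), accuracy)  # 2 0.5
```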

Detecting model drift involves monitoring the performance of a machine learning model over time to identify any degradation in its performance. This can be achieved through various methods:

Find out more about model drift in our blog ‘What is Model Drift and 5 Ways to Prevent It’.

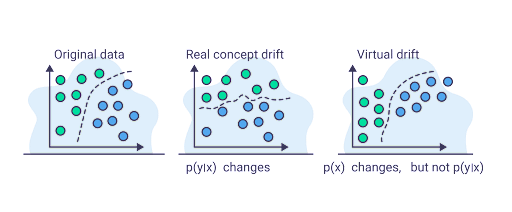

Data drift occurs due to changes in your input or source data. To detect it, observe your model's input data in production and compare it with the original data from the training phase. A strong indication of data drift is that training and production data no longer share the same format or distribution.

For example, in the case of changes in data format, consider that you trained a machine learning model for house price prediction. In production, ensure that the input matrix has the same columns as the data you used during training. Changes in the distribution of the source data relative to the original data will require statistical techniques to detect.
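A format check like this can be as simple as comparing column names. The sketch below assumes each production request arrives as a dict of feature values; the feature names and the `check_schema` helper are hypothetical.

```python
def check_schema(training_columns, production_row):
    """Compare a production input's columns with the training columns.

    Returns (missing, unexpected): columns the model expects but did not
    receive, and columns it received but never saw during training.
    """
    expected = set(training_columns)
    received = set(production_row)
    missing = sorted(expected - received)
    unexpected = sorted(received - expected)
    return missing, unexpected


training_columns = ["sqft", "bedrooms", "zip_code", "year_built"]
# A production request where "year_built" was renamed upstream.
production_row = {"sqft": 1450, "bedrooms": 3, "zip_code": "94110", "built_year": 1987}

missing, unexpected = check_schema(training_columns, production_row)
print(missing, unexpected)  # ['year_built'] ['built_year']
```

A production check would typically also compare data types, but the idea is the same: any non-empty result means the input no longer matches what the model was trained on.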

The following tests can be used to detect data drift and changes in the distribution of the input data:
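One common choice for a single numeric feature is the two-sample Kolmogorov-Smirnov (KS) test, which measures the largest gap between the empirical distribution functions of the training and production samples. The standard-library sketch below computes that statistic and compares it with the large-sample critical value at significance level `alpha`; the feature values are made up. In practice, a library routine such as `scipy.stats.ks_2samp` also returns a p-value.

```python
import bisect
import math


def ks_statistic(reference, current):
    """Two-sample KS statistic: max |F_ref(x) - F_cur(x)| over all x."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    # Both empirical CDFs are step functions that only jump at observed
    # values, so checking every observed value finds the maximum gap.
    for x in ref + cur:
        f_ref = bisect.bisect_right(ref, x) / len(ref)
        f_cur = bisect.bisect_right(cur, x) / len(cur)
        d = max(d, abs(f_ref - f_cur))
    return d


def ks_critical_value(n, m, alpha=0.05):
    """Large-sample critical value for the two-sample KS test."""
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    return c_alpha * math.sqrt((n + m) / (n * m))


# Hypothetical feature values: production data shifted upward by 30 units.
training_values = list(range(100))
production_values = list(range(30, 130))

d = ks_statistic(training_values, production_values)
threshold = ks_critical_value(len(training_values), len(production_values))
print(round(d, 3), round(threshold, 3), d > threshold)  # 0.3 0.192 True
```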

You can detect concept drift by detecting changes in prediction probabilities given the input. A change in your model's outputs for production inputs can indicate a shift in the underlying relationship between the inputs and the target, which you do not observe directly.

For example, if your house price prediction model does not account for inflation, it will start underestimating house prices. You can also detect drift through ML monitoring tools and techniques such as performance monitoring. A change in your model's accuracy or in its classification confidence could indicate concept drift.
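When ground-truth labels arrive for production predictions, a simple way to watch for this is to compare accuracy over a sliding window with the accuracy measured before deployment. The sketch below is one such monitor; the baseline, tolerance, and window size are assumed values you would set per model, and `RollingAccuracyMonitor` is a hypothetical name. For a regression model such as the house price example, you would track an error metric instead of accuracy.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Flags possible concept drift when windowed accuracy falls below a baseline.

    baseline: accuracy measured on held-out data at training time.
    max_drop: largest tolerated absolute drop before alerting (assumed value).
    """

    def __init__(self, baseline, max_drop=0.05, window=100):
        self.baseline = baseline
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)  # 1 = correct, 0 = incorrect

    def update(self, prediction, label):
        self.outcomes.append(int(prediction == label))

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def drift_suspected(self):
        # Only judge once the window is full, to avoid noisy early alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.max_drop


monitor = RollingAccuracyMonitor(baseline=0.90, max_drop=0.05, window=10)
predictions = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
labels = [1, 0, 0, 1, 1, 1, 0, 1, 1, 1]
for p, y in zip(predictions, labels):
    monitor.update(p, y)
print(monitor.accuracy(), monitor.drift_suspected())  # 0.7 True
```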

Here are three ways to prevent concept drift:

To monitor the performance of a machine learning model, we need to clearly define what counts as poor performance. This typically means specifying an accuracy score or error as the expected value and observing any deviation from the expected performance over time.

In practice, data scientists understand that a machine learning model will not perform as well on real-world data as the test data used during development. Additionally, real-world data is very likely to change over time. For these reasons, we can expect and tolerate some level of performance decay once the model is deployed. To this end, we use an upper and lower bound for the expected performance of the model. The data science team should carefully choose the parameters that define expected performance in collaboration with subject matter experts.

Performance decay has very different consequences depending on the use case. The level of performance decay acceptable thus depends on the application of the machine learning model. For example, we may tolerate a 3% accuracy decrease on an animal sound classification app, but a 3% accuracy decrease would be unacceptable for a brain tumor detection system.
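To make the idea of an expected range concrete, the sketch below encodes a lower and upper bound per model and classifies an observed score against it. The numbers are hypothetical: both models are assumed to have scored 0.95 accuracy on test data, and the 3% in the example above is read as 3 percentage points.

```python
from dataclasses import dataclass


@dataclass
class ExpectedRange:
    """Expected accuracy band for a deployed model (bounds chosen with domain experts)."""
    lower: float
    upper: float

    def status(self, observed):
        if observed < self.lower:
            return "below expected range"
        if observed > self.upper:
            return "above expected range"
        return "within expected range"


# Both models scored 0.95 on test data (hypothetical); tolerances differ by use case.
animal_sounds = ExpectedRange(lower=0.92, upper=0.97)     # tolerates a 3-point drop
tumor_detection = ExpectedRange(lower=0.945, upper=0.97)  # tolerates only half a point

observed = 0.92  # the same 3-point drop for both models
print(animal_sounds.status(observed))    # within expected range
print(tumor_detection.status(observed))  # below expected range
```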

With online learning, the model trains every time new data is available, instead of waiting to accumulate a large dataset and then retraining the model.
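As an illustration of online learning (not necessarily how any particular system implements it), the sketch below updates a linear regression model with one stochastic gradient descent (SGD) step per incoming example. The learning rate and the synthetic data stream, where the true relationship changes from y = 2x to y = 3x partway through, are assumptions chosen to show the model adapting to concept drift.

```python
class OnlineLinearRegressor:
    """Linear model y = w.x + b, updated with one SGD step per new example."""

    def __init__(self, n_features, learning_rate=0.05):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def update(self, x, y):
        # Gradient step on the squared error 0.5 * (prediction - y) ** 2
        # for this single example; no stored dataset is needed.
        error = self.predict(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


model = OnlineLinearRegressor(n_features=1)
stream_x = [0.5, 1.0, 1.5, 2.0]

# Phase 1: the true relationship is y = 2x.
for step in range(2000):
    x = stream_x[step % 4]
    model.update([x], 2 * x)
print(round(model.predict([1.0]), 2))  # 2.0

# Phase 2: the relationship drifts to y = 3x; the model keeps learning.
for step in range(2000):
    x = stream_x[step % 4]
    model.update([x], 3 * x)
print(round(model.predict([1.0]), 2))  # 3.0
```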

Performance monitoring helps us detect that a production ML model is underperforming and understand why it is underperforming. Monitoring ML performance often includes monitoring model activity, metric change, model staleness (or freshness), and performance degradation. The insights gained through ML performance monitoring can inform changes that improve performance, such as hyperparameter tuning, transfer learning, model retraining, developing a new model, and more.

The right performance metric depends on the model's task. An image classification model might use accuracy, whereas mean squared error (MSE) is better suited to a regression model.
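For illustration, here are plain-Python versions of the two metrics mentioned, with a small, hypothetical task-to-metric lookup of the kind a monitoring setup might use. Libraries such as scikit-learn provide `accuracy_score` and `mean_squared_error` for the same purpose.

```python
def accuracy(y_true, y_pred):
    """Fraction of exact matches: a common metric for classification."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average squared difference: a common metric for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical task-to-metric mapping used when setting up a monitor.
METRIC_FOR_TASK = {
    "classification": accuracy,
    "regression": mean_squared_error,
}

labels = ["cat", "dog", "dog", "bird"]
predicted = ["cat", "dog", "cat", "bird"]
print(METRIC_FOR_TASK["classification"](labels, predicted))  # 0.75

prices = [300.0, 450.0, 500.0]
predicted_prices = [310.0, 440.0, 520.0]
print(METRIC_FOR_TASK["regression"](prices, predicted_prices))  # 200.0
```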

It is important to understand that a single batch with poor performance does not necessarily mean that model performance is degrading. For example, because MSE is sensitive to outliers, one outlier in a batch can sharply increase that batch's error. This spike does not indicate that the model is getting worse; it is simply an artifact of having an outlier in the input data while using MSE as your metric.
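A small numeric example makes this concrete. The residuals below are made up: two batches are identical except that one prediction in the second batch misses by 40 units. MSE jumps by roughly two orders of magnitude, while a robust statistic such as the median absolute error barely moves. Tracking a robust statistic like this alongside MSE is one possible way to tell a single outlier apart from broad degradation.

```python
import statistics


def mse(residuals):
    """Mean squared error from residuals (true value minus prediction)."""
    return sum(r * r for r in residuals) / len(residuals)


def median_absolute_error(residuals):
    """Median of absolute residuals; insensitive to a few extreme values."""
    return statistics.median([abs(r) for r in residuals])


typical_batch = [1.0, -2.0, 1.5, -1.0, 0.5, 2.0, -1.5, 1.0, -0.5, 1.0]
outlier_batch = typical_batch[:-1] + [40.0]  # one unusual input, otherwise identical

print(mse(typical_batch), mse(outlier_batch))  # 1.7 161.6
print(median_absolute_error(typical_batch), median_absolute_error(outlier_batch))  # 1.0 1.25
```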

Machine learning performance monitoring tools are valuable for detecting when a production model is underperforming and what we can do to improve it. To remediate issues in an underperforming model, it is helpful to:

ML monitoring can be more effective with a dedicated monitoring solution. Look for the following features when selecting ML monitoring tools:

Checking the input data establishes a short feedback loop to quickly detect when the production model starts underperforming.
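A minimal sketch of such an input check, assuming the training-time profile is stored as a (min, max) range per numeric feature; the feature names, ranges, and the `validate_inputs` helper are hypothetical. It counts missing values and values outside the range seen during training, two problems that can be caught before any labels arrive.

```python
import math


def validate_inputs(rows, ranges):
    """Count basic input-data problems in a batch of production rows.

    ranges maps each feature name to the (min, max) seen in training
    (an assumed way of storing the training profile).
    """
    issues = {"missing": 0, "out_of_range": 0}
    for row in rows:
        for feature, (low, high) in ranges.items():
            value = row.get(feature)
            if value is None or (isinstance(value, float) and math.isnan(value)):
                issues["missing"] += 1
            elif not low <= value <= high:
                issues["out_of_range"] += 1
    return issues


ranges = {"sqft": (300, 12000), "bedrooms": (0, 10)}
rows = [
    {"sqft": 1450, "bedrooms": 3},
    {"sqft": None, "bedrooms": 2},
    {"sqft": 1800, "bedrooms": 42},
    {"sqft": float("nan"), "bedrooms": 4},
]
issues = validate_inputs(rows, ranges)
print(issues)  # {'missing': 2, 'out_of_range': 1}
```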

Model monitoring is important to ensure consistent performance, maintain accuracy, identify and address data drift or concept drift, and ensure compliance with ethical and legal standards. It enables timely updates and improvements, enhancing the overall reliability and effectiveness of the model.

Measuring machine learning performance involves selecting appropriate evaluation metrics, splitting the data into training and testing sets, and using techniques like cross-validation for robust assessment. Common metrics include accuracy, precision, recall, F1-score, and AUC-ROC. The choice of metrics depends on the problem type and specific requirements, such as whether false positives or false negatives are more costly. To estimate how well a model generalizes, use techniques like k-fold cross-validation, which trains and validates the model on different data subsets multiple times and averages the resulting performance measures.
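To show the mechanics of k-fold cross-validation, here is a dependency-free sketch: it splits the indices into k contiguous folds, trains on k-1 of them, scores on the held-out fold, and averages. The majority-class "model" and the toy labels are placeholders; real projects would usually shuffle the data first and might use scikit-learn's `KFold` or `cross_val_score` instead.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; folds are contiguous (no shuffling)."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_validate(xs, ys, fit, score, k=5):
    """Average of k validation scores, each from a model trained on the other folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)


def fit_majority(xs, ys):
    """Placeholder model: always predict the most common training label."""
    return max(set(ys), key=ys.count)


def score_accuracy(model, xs, ys):
    """Accuracy of the constant prediction stored in `model`."""
    return sum(y == model for y in ys) / len(ys)


xs = list(range(10))
ys = [0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
print(cross_validate(xs, ys, fit_majority, score_accuracy, k=5))  # 0.8
```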

Monitor ML models by tracking performance metrics and system characteristics for optimal performance, reliability, and scalability. Employ data monitoring, model evaluation, and resource tracking. Analyze data quality, distribution, and drift; track metrics like accuracy, F1-score, and AUC-ROC for classification or MSE, MAE, and R-squared for regression. Assess resource utilization by monitoring GPU/CPU usage, memory, and latency. Use tools like Aporia, TensorBoard, MLflow, or Prometheus to streamline monitoring and ensure stability, accuracy, and efficiency.
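As a small example of resource tracking, the sketch below times individual prediction calls with the standard library and summarizes them as median, 95th, and 99th percentile latency. `dummy_predict` is a stand-in for a real model; in production these numbers would more likely be exported to a metrics system such as Prometheus than computed in-process like this.

```python
import statistics
import time


def measure_latency(predict, inputs):
    """Call `predict` on each input and return per-call latency in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def latency_summary(latencies_ms):
    """Median plus 95th and 99th percentile latency."""
    # 99 cut points: cuts[i] is the (i + 1)th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100)
    return {"p50": statistics.median(latencies_ms), "p95": cuts[94], "p99": cuts[98]}


def dummy_predict(features):
    """Stand-in for a real model: a fixed linear score."""
    return sum(0.5 * v for v in features)


inputs = [[float(i), float(i % 7), 1.0] for i in range(200)]
latencies = measure_latency(dummy_predict, inputs)
summary = latency_summary(latencies)
print({name: round(value, 4) for name, value in summary.items()})
```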

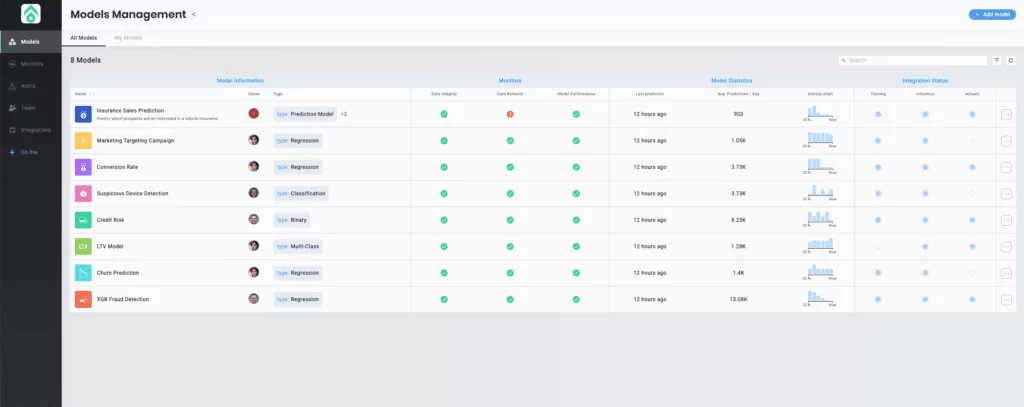

Aporia is a machine learning observability platform. Data science and ML teams from every industry trust Aporia to track model behavior, ensure peak model performance, and easily scale production ML. Aporia supports all machine learning use cases and model types by enabling complete customization of your ML observability experience. Use Aporia and gain key abilities to monitor, explain, and improve production models:

Let’s take a look and see how Aporia’s ML observability platform works.

1. Add as many models as you need, and get a live centralized view of all your production models.

2. Choose a model and dive into its predictions. Slice and dice segments, customize widgets, and get a full view of the behavior and health of your model in production.

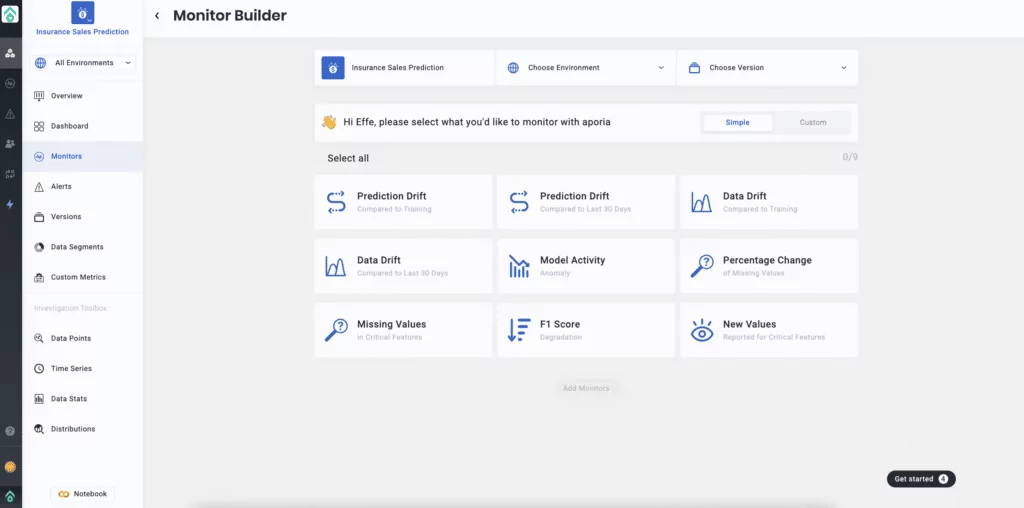

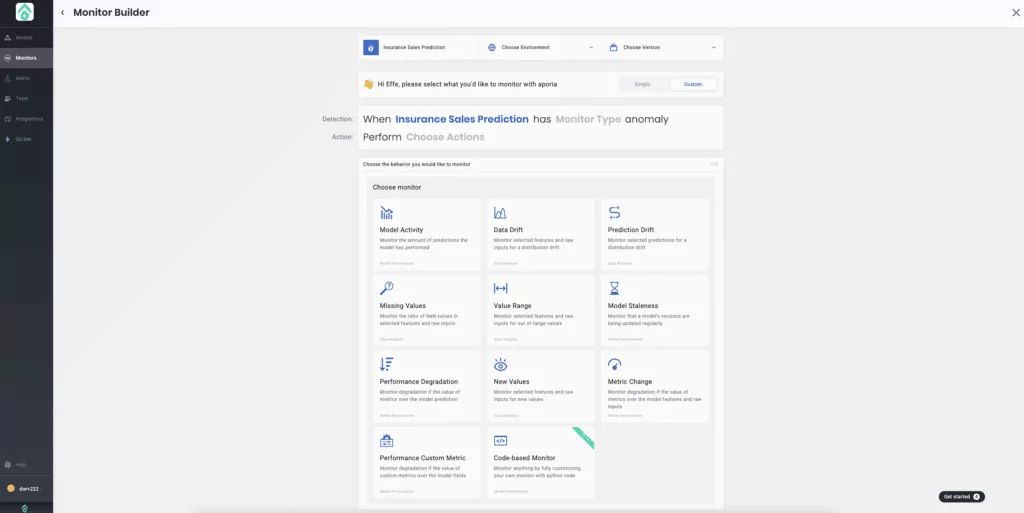

3. Let’s start monitoring your model. You can choose from our automated pre-configured monitors, or…

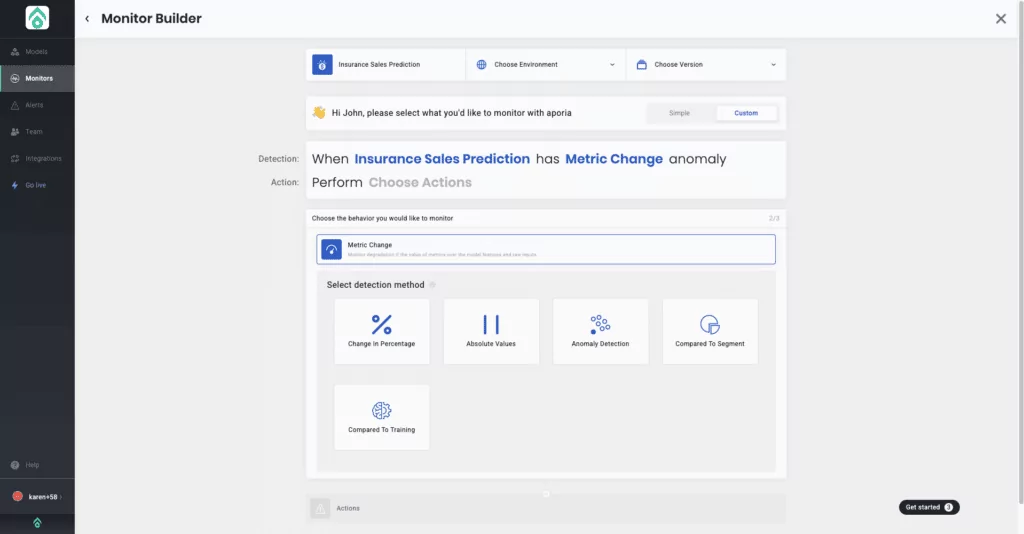

4. Create a customized monitor to track your model for drift, performance degradation, model decay, and more.

5. Determine your detection method.

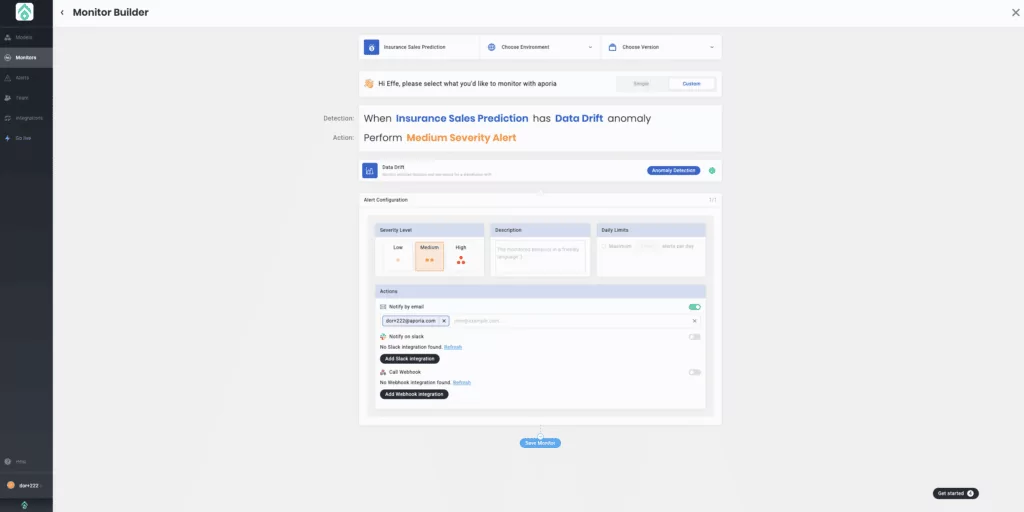

6. Now, it’s time to choose which behavior you want to monitor.

7. Configure alerts and integrate your preferred alert communication channels.

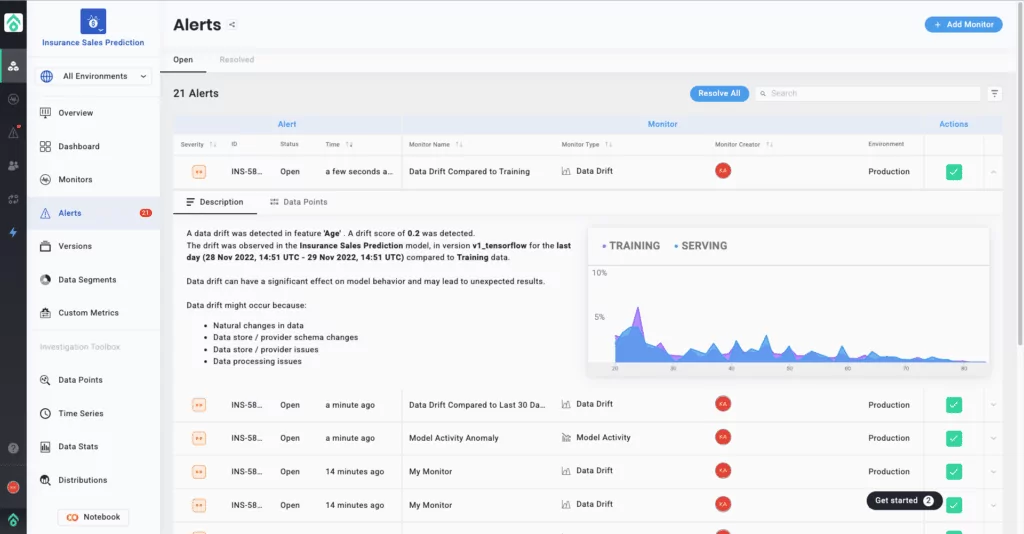

8. Got an alert? Drill down into your model issues, and understand where, when, and why it was triggered.

9. Easily explain your predictions in human-readable text and simulate "What if?" scenarios with Aporia's XAI. Re-explain your predictions to determine the most impactful features.
