Applying a function to a DataFrame column runs that function on the value in every row. In this short how-to article, we will learn how to apply a function to two columns at once in Pandas and PySpark DataFrames.

Pandas

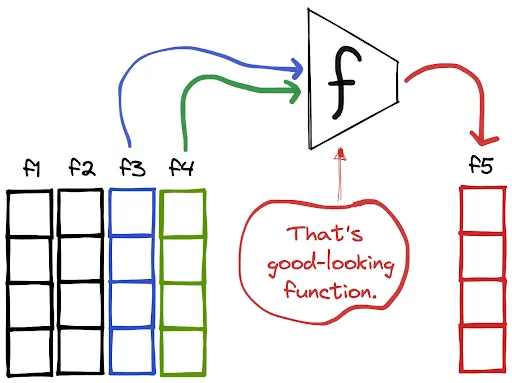

Suppose we have a user-defined function f that takes two input values. We can apply it to two columns of a DataFrame by calling apply with axis=1 and a lambda expression that passes the two column values from each row to f. If f returns a single value, we can store the results in a new column.
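As a minimal sketch of this pattern, assume a small DataFrame with two hypothetical numeric columns, a and b, and a hypothetical function f that simply adds its inputs. None of these names come from a real dataset; they only illustrate how the row is passed to the lambda.

```python
import pandas as pd

# Hypothetical sample data; the column names "a" and "b" are assumptions
df = pd.DataFrame({"a": [1, 2, 3], "b": [10, 20, 30]})


def f(x, y):
    # Any function of two scalar inputs that returns a single value
    return x + y


# axis=1 passes each row to the lambda as a Series indexed by column name
df["total"] = df.apply(lambda row: f(row["a"], row["b"]), axis=1)

print(df["total"].tolist())  # [11, 22, 33]
```

Without axis=1, apply would pass whole columns to the lambda instead of rows, and the lookups by column name would fail.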

Let’s say we have first and last name columns and want to create a new column containing the initials. The find_initials function defined below does this operation. We can apply it to the first name and last name columns as follows:

    # Defining the function
    def find_initials(fname, lname):
        return fname[0] + lname[0]

    # Applying it to two columns
    df["initials"] = df.apply(lambda x: find_initials(x["fname"], x["lname"]), axis=1)

PySpark

The approach is similar in PySpark, but we need an additional step: wrapping the Python function in a user-defined function (UDF) with a declared return type.

    # Importing the necessary modules
    from pyspark.sql import functions as F
    from pyspark.sql.types import StringType

    # Defining the function
    def take_initials(fname, lname):
        return fname[0] + lname[0]

    # Creating a UDF that returns a string
    udf = F.udf(take_initials, StringType())

    # Applying it to two columns
    df = df.withColumn("initials", udf(F.col("fname"), F.col("lname")))

Note that row-wise operations like these are expensive in both Pandas and PySpark, because the Python function is called once per row. Prefer vectorized operations whenever an equivalent exists.
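For the initials example, a vectorized version in Pandas could look like the sketch below. It assumes a small hypothetical DataFrame that reuses the fname and lname column names, and it swaps the row-wise apply for the .str accessor, which works on the whole column at once.

```python
import pandas as pd

# Hypothetical sample data using the article's column names
df = pd.DataFrame({"fname": ["Jane", "John"], "lname": ["Doe", "Smith"]})

# .str[0] takes the first character of every value in the column at once,
# and + concatenates the two resulting string columns element-wise
df["initials"] = df["fname"].str[0] + df["lname"].str[0]

print(df["initials"].tolist())  # ['JD', 'JS']
```

In PySpark, built-in column functions can likely play the same role without a UDF, for example F.concat(F.substring("fname", 1, 1), F.substring("lname", 1, 1)). Treat that as an untested suggestion rather than a drop-in replacement.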
