Monitoring LLMs: Metrics, Challenges, & Hallucinations

This guide walks you through the challenges of monitoring Large Language Models and the strategies for addressing them. We’ll discuss potential model pitfalls,...

Aporia has been acquired by Coralogix, instantly bringing AI security and reliability to thousands of enterprises.

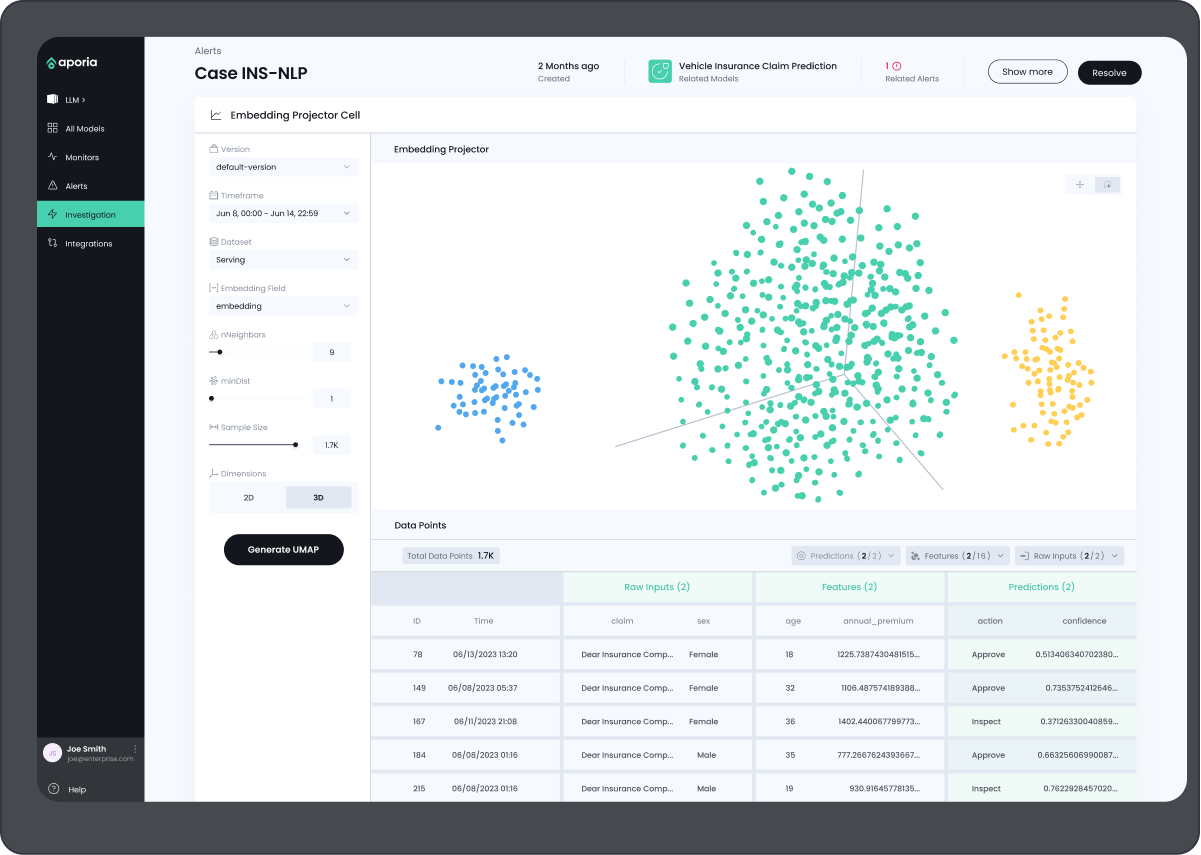

Aporia provides native support for comprehensive monitoring, sophisticated troubleshooting, and the identification of fine-tuning opportunities for large language models (LLMs) in production environments. Enhance your operational efficiency by assessing LLM responses, streamlining the process of prompt engineering, and promptly detecting any instances of LLM hallucinations.

LLMs can hallucinate and produce inaccurate or misleading responses. Monitor your model’s prompt and response embeddings in real time.
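One simple way to watch embeddings over time is to compare a reference window (for example, responses from a known-good period) with a recent production window. The sketch below measures the cosine distance between the two windows' centroids. It illustrates a common drift check and is not Aporia's implementation. The function names, the toy 3-dimensional vectors, and the `DRIFT_THRESHOLD` value are all hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def centroid(vectors: Sequence[Vector]) -> List[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_distance(a: Vector, b: Vector) -> float:
    """1 - cosine similarity: 0 for identical directions, 1 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def embedding_drift(reference: Sequence[Vector], production: Sequence[Vector]) -> float:
    """Cosine distance between the reference-window and production-window centroids."""
    return cosine_distance(centroid(reference), centroid(production))


# Hypothetical 3-d embeddings; real ones would come from your embedding model.
reference = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
production = [[0.0, 1.0, 0.0], [0.1, 0.9, 0.0]]

DRIFT_THRESHOLD = 0.2  # assumption: tune per model and embedding space
score = embedding_drift(reference, production)
print(f"drift={score:.3f} alert={score > DRIFT_THRESHOLD}")
```

Comparing centroids is cheap, but it can miss shifts that leave the mean unchanged. Per-cluster or distribution-level tests catch more of those shifts.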

Identify, respond to, and rectify inconsistencies before they affect your business operations or user experience.

Track the accuracy and reliability of generated outputs to identify anomalies in the model's behavior over time or across different data distributions. By using Aporia to track these aspects, you can proactively fine-tune LLMs so that they remain robust, fair, and effective in diverse real-world applications.
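A rolling z-score is one common and simple way to flag anomalies in a quality metric over time. It may not be the method Aporia uses. Each new value is compared against the mean and standard deviation of a trailing window. The function name, window size, threshold, and the daily accuracy series below are all hypothetical.

```python
import statistics
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], window: int = 5, threshold: float = 3.0) -> List[int]:
    """Return indices whose value lies more than `threshold` population standard
    deviations from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if values[i] != mean:
                anomalies.append(i)
            continue
        if abs(values[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily accuracy of an LLM-backed feature, with one sharp dip.
daily_accuracy = [0.91, 0.90, 0.92, 0.91, 0.90, 0.91, 0.92, 0.74, 0.91]
print(zscore_anomalies(daily_accuracy))
```

Here the dip at index 7 is flagged. Once the dip itself enters the trailing window, it widens the standard deviation, so the recovery at index 8 is not flagged. This is a known trade-off of window-based detectors.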

Analyze your LLM clusters in depth with advanced evaluation metrics. Detect drift and performance degradation by monitoring key indicators such as perplexity, token-level accuracy, and other customizable metrics. Create clusters of semantically related data and organize them by performance metrics.
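Perplexity and token-level accuracy both have standard definitions. Perplexity is the exponential of the mean negative log-likelihood that the model assigns to a sequence's tokens, and lower values mean the model is less "surprised." Token-level accuracy is the fraction of aligned positions where a predicted token matches a reference token. The minimal sketch below assumes you already have per-token natural-log probabilities and pre-aligned token lists. The function names and inputs are illustrative.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood (natural log) over the tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def token_accuracy(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """Fraction of aligned positions where the predicted token equals the reference."""
    if len(predicted) != len(reference):
        raise ValueError("sequences must be aligned to the same length")
    if not reference:
        raise ValueError("need at least one token")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


# Every token assigned probability 0.5, so perplexity is 2.
print(perplexity([math.log(0.5)] * 4))
print(token_accuracy(["the", "cat", "sat"], ["the", "dog", "sat"]))
```

To monitor drift, you would track these values per time window or per cluster and compare them with a baseline, for example using the rolling check sketched earlier.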

Identify underperforming LLM clusters by highlighting those with low evaluation scores. To improve response accuracy and success rates, use Aporia’s automated workflows to refine prompt engineering.
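Once each response is assigned to a cluster and given an evaluation score, finding underperforming clusters reduces to grouping and thresholding. The sketch below assumes the cluster IDs come from an upstream clustering step. The cluster names, scores, threshold, and `min_size` parameter are hypothetical, and the code does not use any Aporia API.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def underperforming_clusters(
    records: Iterable[Tuple[str, float]], threshold: float, min_size: int = 1
) -> Dict[str, float]:
    """Group (cluster_id, eval_score) pairs and return {cluster_id: mean_score}
    for clusters with at least `min_size` records whose mean falls below `threshold`."""
    scores: Dict[str, List[float]] = defaultdict(list)
    for cluster_id, score in records:
        scores[cluster_id].append(score)
    return {
        cid: mean(vals)
        for cid, vals in scores.items()
        if len(vals) >= min_size and mean(vals) < threshold
    }


# Hypothetical (cluster, evaluation score) pairs.
records = [
    ("billing", 0.92), ("billing", 0.88),
    ("refunds", 0.55), ("refunds", 0.61),
    ("shipping", 0.81),
]
flagged = underperforming_clusters(records, threshold=0.7, min_size=2)
print(flagged)
```

The `min_size` guard keeps a cluster with only a handful of samples from being flagged on noise alone.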

Decipher the underlying decision-making process to understand and interpret the model's generated output in relation to its vast training data. Aporia’s enhanced interpretability aids in addressing potential biases, ensuring trustworthiness, and facilitating the fine-tuning of LLMs, ultimately fostering safer and more robust AI systems.

Get to the heart of your LLM performance issues with advanced root cause analysis (RCA). Diagnose and resolve complex problems by tracing them back to their origin, and address the root cause of drift and performance degradation to prevent it from recurring.

Depending on the industry, businesses may be subject to regulatory requirements that mandate monitoring and auditing of their models to ensure security, privacy, and reliable LLM outcomes. Aporia's platform can help businesses meet these requirements, comply with relevant regulations, and put Responsible AI into practice.

Aporia employs advanced NLP monitoring practices, such as analyzing token distribution, perplexity, and attention weights, allowing for a granular understanding of a model's behavior. This fosters continuous improvement and mitigates the risk of unintended consequences. By systematically evaluating these model-agnostic and model-specific indicators, practitioners can effectively fine-tune NLP systems to uphold robust performance, align with industry standards, and extract maximum value from unstructured textual data.
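A token distribution can be compared between a baseline period and production by using the Jensen-Shannon divergence, which is symmetric and, with base-2 logarithms, bounded between 0 (identical) and 1 (disjoint). The sketch below uses this standard statistic, though Aporia's exact method is not stated. It splits text on whitespace as a hypothetical stand-in for the model's real tokenizer, and the sample sentences are invented.

```python
import math
from collections import Counter
from typing import Dict, Iterable


def token_distribution(tokens: Iterable[str]) -> Dict[str, float]:
    """Relative frequency of each token."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def _kl(p: Dict[str, float], m: Dict[str, float]) -> float:
    """KL(p || m) in bits; m is positive wherever p is, since m is a mixture containing p."""
    return sum(pv * math.log2(pv / m[t]) for t, pv in p.items() if pv > 0)


def js_divergence(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Jensen-Shannon divergence in bits: 0 for identical, 1 for disjoint distributions."""
    vocab = set(p) | set(q)
    m = {t: 0.5 * (p.get(t, 0.0) + q.get(t, 0.0)) for t in vocab}
    return 0.5 * _kl(p, m) + 0.5 * _kl(q, m)


# Whitespace splitting is a placeholder for the model's tokenizer.
baseline = token_distribution("the order shipped on time".split())
production = token_distribution("the order was refunded twice".split())
print(f"JS divergence: {js_divergence(baseline, production):.3f}")
```

In practice you would compute this over large windows of traffic and alert when the divergence exceeds a threshold calibrated on historical variation.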