

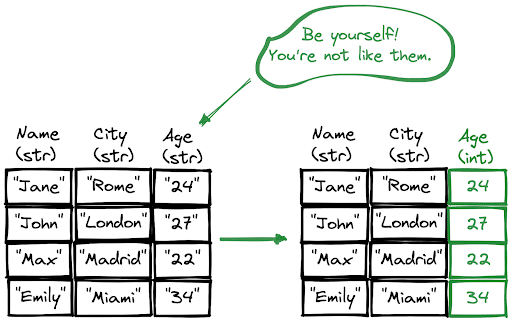

Each column in a DataFrame has a data type (dtype). Some functions and methods expect columns in a specific data type, and therefore it is a common operation to convert the data type of columns. In this short how-to article, we will learn how to change the data type of a column in Pandas and PySpark DataFrames.

In a Pandas DataFrame, we can check the data types of columns with the dtypes attribute.

df.dtypes

Name    string
City    string
Age     string
dtype: object

The astype method changes the data type of a column. Suppose a column holds numerical values but its data type is string. This is a problem because we cannot perform numerical analysis on textual data.
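To see why this matters, here is a minimal sketch using hypothetical sample values (the names, cities, and ages below are made up for illustration). When ages are stored as strings, sorting compares the text character by character rather than by numeric value.

```python
import pandas as pd

# Hypothetical sample data; the values are assumptions for illustration only.
df = pd.DataFrame(
    {
        "Name": ["Jane", "John", "Emily"],
        "City": ["Houston", "Dallas", "Austin"],
        "Age": ["25", "31", "9"],  # ages stored as text, not numbers
    }
)

# Sorting a string column is lexicographic, so "9" ends up after "31".
sorted_ages = df["Age"].sort_values().tolist()
print(sorted_ages)  # ['25', '31', '9']
```

Converting the column to an integer type makes such operations behave numerically.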

df["Age"] = df["Age"].astype("int")

We just need to pass the desired data type to astype. Let’s confirm the change by checking the data types again.

df.dtypes

Name    string
City    string
Age     int64
dtype: object

It is possible to change the data type of multiple columns in a single operation. The columns and their data types are written as key-value pairs in a dictionary.

df = df.astype({"Age": "int", "Score": "int"})

In PySpark, we can use the cast method to change the data type.

from pyspark.sql.types import IntegerType
from pyspark.sql import functions as F

# first method: pass the type name as a string
df = df.withColumn("Age", df.Age.cast("int"))

# second method: pass a data type instance
df = df.withColumn("Age", df.Age.cast(IntegerType()))

# third method: reference the column with F.col
df = df.withColumn("Age", F.col("Age").cast(IntegerType()))

To change the data type of multiple columns, we can chain the withColumn calls.

# cast each column from its own values (Score is cast from Score, not Age)
df = df.withColumn("Age", df.Age.cast("int")) \
       .withColumn("Score", df.Score.cast("int"))
