Concept Drift in Machine Learning 101

As machine learning models become more and more popular solutions for automation and prediction tasks, many tech companies and data...


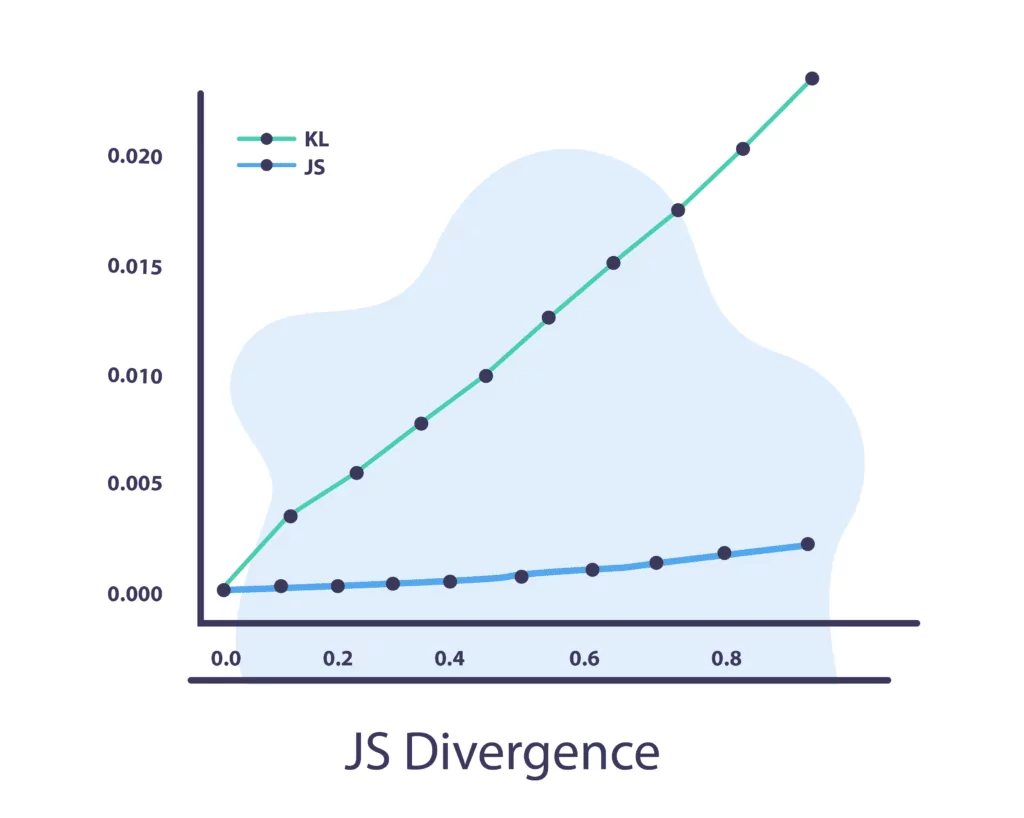

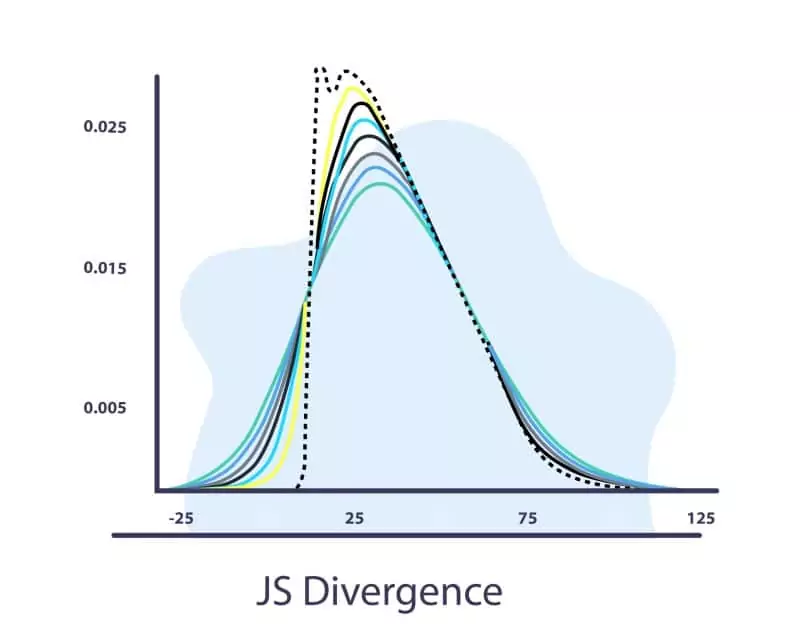

Jensen-Shannon (JS) divergence is a statistical method for concept drift detection that builds on the Kullback-Leibler (KL) divergence. It compares two probability distributions, P and Q.

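It is defined as

$$
\mathrm{JS}(P \parallel Q) = \tfrac{1}{2}\,\mathrm{KL}(P \parallel M) + \tfrac{1}{2}\,\mathrm{KL}(Q \parallel M)
$$

where $M = \tfrac{1}{2}(P + Q)$ is the mean of P and Q.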
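To make the formula concrete, here is a minimal sketch in plain Python (standard library only). It assumes discrete distributions given as probability lists over the same bins, for example normalized histograms of one feature in a reference window and a production window. The helper names `kl_divergence`, `js_divergence`, and `to_distribution`, the sample counts, and `DRIFT_THRESHOLD` are hypothetical and chosen only for illustration; the threshold in particular is not a recommended value.

```python
import math

def kl_divergence(p, q):
    """KL(P || Q) in nats for discrete distributions over the same bins."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # terms with P(x) = 0 contribute nothing
        if qi == 0:
            return math.inf  # P has mass where Q has none
        total += pi * math.log(pi / qi)
    return total

def js_divergence(p, q):
    """JS(P || Q) = 0.5 * KL(P || M) + 0.5 * KL(Q || M), with M = (P + Q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

def to_distribution(counts):
    """Normalize histogram counts into probabilities that sum to 1."""
    total = sum(counts)
    return [c / total for c in counts]

# Hypothetical histogram counts of one feature over the same three bins
reference = to_distribution([30, 50, 20])   # e.g. training data
production = to_distribution([10, 40, 50])  # e.g. recent live data

DRIFT_THRESHOLD = 0.05  # illustrative value only; tune per feature in practice
score = js_divergence(reference, production)
print(f"JS divergence: {score:.4f}, drift flagged: {score > DRIFT_THRESHOLD}")
```

If you use SciPy instead, note that `scipy.spatial.distance.jensenshannon` returns the Jensen-Shannon *distance*, which is the square root of the divergence, so square its result before comparing it with this sketch.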

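The main differences between JS divergence and KL divergence are that JS divergence is symmetric, $\mathrm{JS}(P \parallel Q) = \mathrm{JS}(Q \parallel P)$, and always has a finite value. KL divergence has neither property: $\mathrm{KL}(P \parallel Q)$ generally differs from $\mathrm{KL}(Q \parallel P)$, and it is infinite whenever P assigns probability to an outcome that Q does not. JS divergence avoids this because the mixture $\tfrac{1}{2}(P + Q)$ is nonzero wherever P or Q is, and it is bounded above by $\ln 2$ (or by 1 with base-2 logarithms).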
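Both properties can be checked numerically with a small, self-contained sketch. It defines two hypothetical helpers, `kl_divergence` and `js_divergence`, for discrete distributions over the same bins (natural logarithm), and uses made-up distributions chosen so that each puts mass in a bin where the other has none.

```python
import math

def kl_divergence(p, q):
    """KL(P || Q) in nats for discrete distributions over the same bins."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # terms with P(x) = 0 contribute nothing
        if qi == 0:
            return math.inf  # P has mass where Q has none
        total += pi * math.log(pi / qi)
    return total

def js_divergence(p, q):
    """JS(P || Q) = 0.5 * KL(P || M) + 0.5 * KL(Q || M), with M = (P + Q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)

# KL is asymmetric even when both directions are finite
a = [0.9, 0.1]
b = [0.5, 0.5]
print(kl_divergence(a, b), kl_divergence(b, a))  # roughly 0.368 vs 0.511

# Made-up distributions: each has mass in a bin where the other has none
p = [0.5, 0.5, 0.0]
q = [0.0, 0.5, 0.5]
print(kl_divergence(p, q), kl_divergence(q, p))  # inf inf
print(js_divergence(p, q), js_divergence(q, p))  # both equal 0.5 * ln 2

# Completely disjoint supports reach the upper bound ln 2
print(js_divergence([1.0, 0.0], [0.0, 1.0]), math.log(2))
```

The finite, symmetric result is what makes JS divergence convenient as a drift score: swapping the reference and production windows does not change it, and an empty bin in one window does not blow it up to infinity.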
