Concept Drift in Machine Learning 101



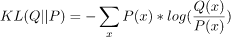

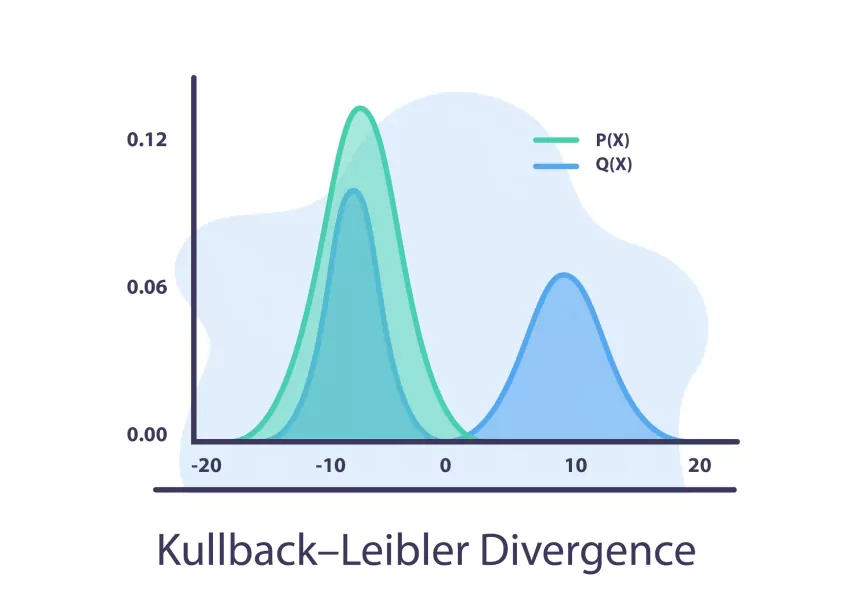

Kullback–Leibler (KL) divergence, sometimes referred to as relative entropy, is a statistical measure that can be used for concept drift detection. It quantifies how much one probability distribution differs from another. If Q is the distribution of the old data and P is the distribution of the new data, we would like to calculate:

$$D_{KL}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

* The “||” represents the divergence. The sum runs over every value x the data can take; for continuous distributions it becomes an integral.
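As a minimal sketch, assuming discrete (binned) data, the KL formula D_KL(P || Q) = Σ_x P(x) log(P(x) / Q(x)) can be computed in pure Python by placing the old and new samples into the same fixed bins. The helper names `kl_divergence` and `to_distribution`, the bin edges, the smoothing constant `eps`, the sample values, and the `DRIFT_THRESHOLD` of 0.1 are all hypothetical choices for illustration, not Aporia's implementation or any library's API.

```python
import math


def kl_divergence(p, q, eps=1e-10):
    """Return D_KL(P || Q) = sum_x P(x) * log(P(x) / Q(x)), in nats.

    p: bin probabilities of the new data; q: bin probabilities of the old data.
    eps (hypothetical smoothing) is added to every bin and both distributions
    are renormalized, so empty bins do not cause log(0) or division by zero.
    It is not part of the KL definition itself.
    """
    if len(p) != len(q):
        raise ValueError("p and q must cover the same bins")
    p = [pi + eps for pi in p]
    q = [qi + eps for qi in q]
    p_sum, q_sum = sum(p), sum(q)
    return sum(
        (pi / p_sum) * math.log((pi / p_sum) / (qi / q_sum))
        for pi, qi in zip(p, q)
    )


def to_distribution(samples, edges):
    """Bin non-empty raw samples with fixed edges; return bin probabilities.

    Samples below edges[0] or at/above edges[-1] are clipped into the end bins.
    """
    counts = [0] * (len(edges) - 1)
    for x in samples:
        i = 0
        # Advance to the last bin whose left edge is <= x.
        while i < len(counts) - 1 and x >= edges[i + 1]:
            i += 1
        counts[i] += 1
    total = sum(counts)
    return [c / total for c in counts]


# Hypothetical feature values before (old) and after (new) a shift.
old_samples = [0.1, 0.2, 0.3, 0.35, 0.4, 0.45, 0.6, 0.65, 0.8, 0.9]
new_samples = [0.3, 0.6, 0.65, 0.7, 0.8, 0.85, 0.9, 0.92, 0.95, 0.99]
edges = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical bin edges

q_old = to_distribution(old_samples, edges)  # Q: old data
p_new = to_distribution(new_samples, edges)  # P: new data

DRIFT_THRESHOLD = 0.1  # hypothetical; would need tuning on real data
score = kl_divergence(p_new, q_old)
print(f"KL(P_new || Q_old) = {score:.3f}, drift = {score > DRIFT_THRESHOLD}")
```

Binning and smoothing choices matter here: if a bin is empty in the old data but not in the new data, the score is dominated by `eps`, so the bin edges, smoothing, and threshold would all need to be chosen with the real data in mind.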

We can see that if P(x) is high and Q(x) is low, the divergence will be high.

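If P(x) is low and Q(x) is high, the divergence will also be high, but not as high. Each term is weighted by P(x), so values that are rare in the new data count for less; this is also why D_KL(P || Q) generally differs from D_KL(Q || P).

If P(x) and Q(x) are similar, the divergence will be low; it is exactly zero when P and Q are identical.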
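To make these three cases concrete, the sketch below evaluates the KL formula in nats on hypothetical two-bin distributions, using a hypothetical helper `kl` that assumes Q(x) > 0 wherever P(x) > 0. Swapping which distribution plays P and which plays Q changes the score, which shows that KL divergence is not symmetric.

```python
import math


def kl(p, q):
    """D_KL(P || Q) in nats; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Case 1: P(x) high where Q(x) is low (first bin: P = 0.5, Q = 0.2).
case_high_low = kl([0.5, 0.5], [0.2, 0.8])
# Case 2: the reverse, P(x) low where Q(x) is high (first bin: P = 0.2, Q = 0.5).
case_low_high = kl([0.2, 0.8], [0.5, 0.5])
# Case 3: P and Q nearly identical.
case_similar = kl([0.21, 0.79], [0.2, 0.8])

print(f"{case_high_low:.3f}")  # 0.223
print(f"{case_low_high:.3f}")  # 0.193
print(f"{case_similar:.3f}")   # 0.000
```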

To learn more about these concepts, read our articles Concept Drift in Machine Learning 101 and 8 Concept Drift Detection Methods.