

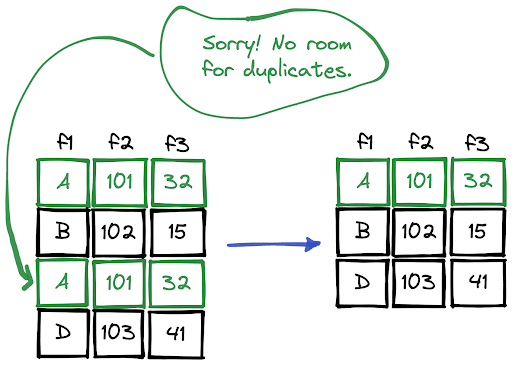

Duplicate rows in a DataFrame can make the results of our analysis unreliable or simply wrong, and they waste memory and computation.

In this short how-to article, we will learn how to drop duplicate rows in Pandas and PySpark DataFrames.

In Pandas, we can use the drop_duplicates function for this task. By default, it drops only rows that are fully identical, meaning the values in all the columns are the same.

df = df.drop_duplicates()

In some cases, rows should count as duplicates when they have the same values in certain columns only. The subset parameter selects the columns to compare when detecting duplicates.

df = df.drop_duplicates(subset=["f1","f2"])

By default, the first occurrence of each group of duplicate rows is kept in the DataFrame and the others are dropped. The keep parameter gives us the option to keep the last occurrence instead.
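To make the effect of subset and keep concrete, here is a minimal sketch on a made-up DataFrame. The column names (f1, f2, f3) and the values are assumptions chosen for illustration; only drop_duplicates and its subset and keep parameters come from the text above.

```python
import pandas as pd

# Hypothetical sample data: rows 0 and 2 are identical in every column,
# while rows 0, 2, and 3 share the same values in f1 and f2 only.
df = pd.DataFrame(
    {
        "f1": ["a", "b", "a", "a"],
        "f2": [1, 2, 1, 1],
        "f3": [10, 20, 10, 30],
    }
)

# Default: only fully identical rows count as duplicates.
all_columns = df.drop_duplicates()

# Compare f1 and f2 only; the first occurrence is kept by default.
first_kept = df.drop_duplicates(subset=["f1", "f2"])

# Same comparison, but keep the last occurrence instead.
last_kept = df.drop_duplicates(subset=["f1", "f2"], keep="last")

print(all_columns.index.tolist())  # [0, 1, 3]
print(first_kept.index.tolist())   # [0, 1]
print(last_kept.index.tolist())    # [1, 3]
```

The surviving rows keep their original index labels, which is why the printed lists show gaps. If a clean 0..n-1 index is needed, drop_duplicates also accepts ignore_index=True.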

# keep the last occurrence
df = df.drop_duplicates(subset=["f1","f2"], keep="last")

In PySpark, the dropDuplicates function can be used for removing duplicate rows.

df = df.dropDuplicates()

It can also check only some of the columns when determining duplicate rows.

df = df.dropDuplicates(["f1","f2"])
