Amir Gorodetzky

Understanding and evaluating model performance is crucial in today’s machine learning-driven world. In this guide, we will explore the ROC-AUC (Receiver Operating Characteristic – Area Under the Curve), a critical metric used in binary classification problems. Tailored for data scientists, ML engineers, and business analysts, this concise overview will equip you with insights into how to calculate and interpret ROC-AUC, its application in scenarios with imbalanced classes, and its essential role in continuous model monitoring.

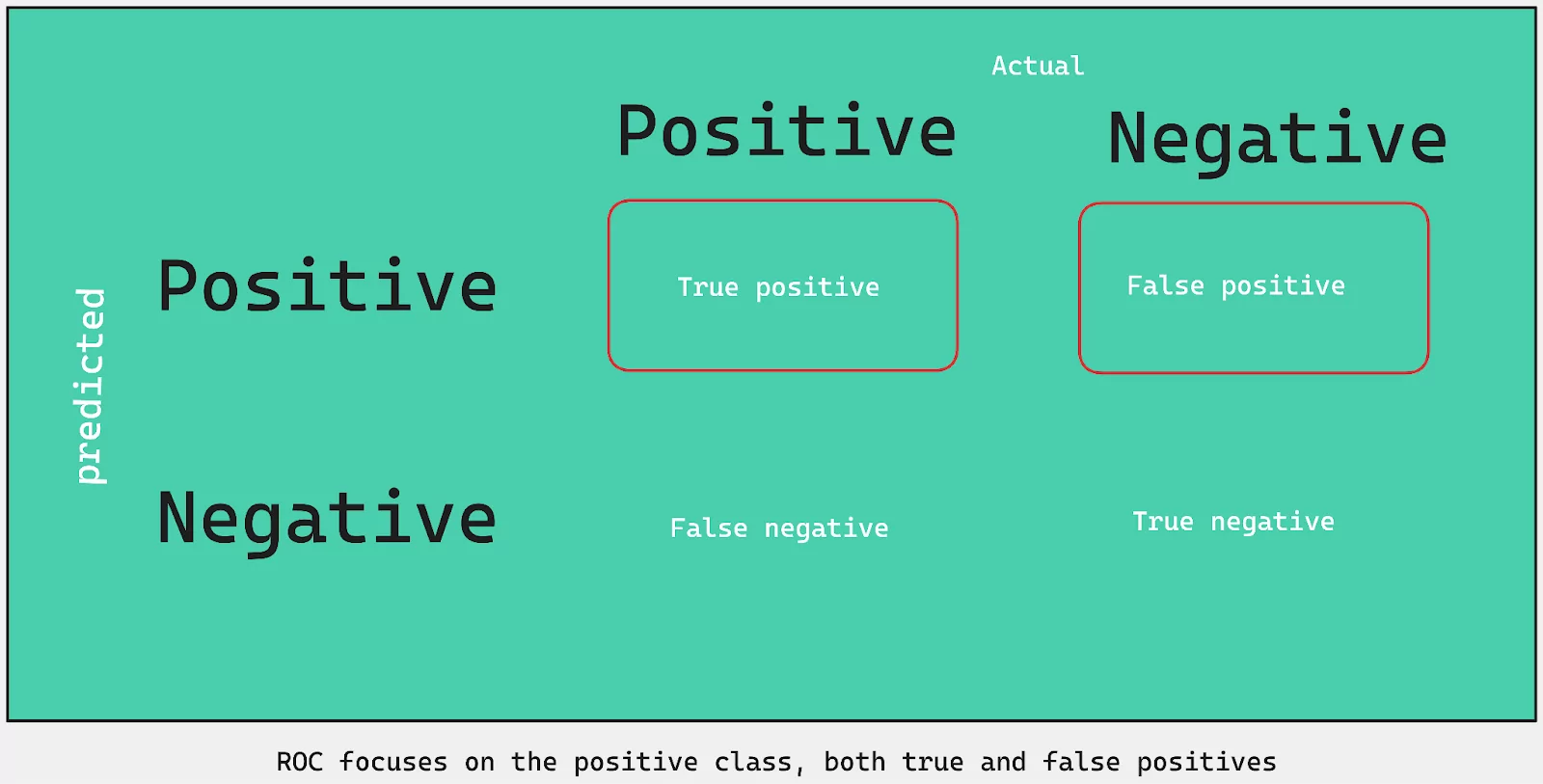

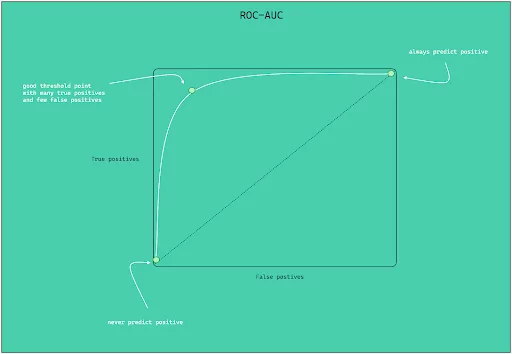

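ROC-AUC is a performance metric for binary classification problems. The ROC curve plots the true positive rate against the false positive rate at every decision threshold, and the area under it summarizes how well the model ranks positive examples above negative ones: 1.0 is a perfect ranking, and 0.5 is no better than chance.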

ROC-AUC differs from PR-AUC (the area under the precision-recall curve). PR-AUC focuses specifically on how well the model predicts the positive class, which makes it more informative when there is a significant class imbalance.
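To make the difference concrete, here is a minimal sketch that scores the same predictions with both metrics. The data set is synthetic and hypothetical (about 2% positives, overlapping score distributions), and scikit-learn's `average_precision_score` is used as one common estimate of PR-AUC; the exact numbers depend on the seed and class ratio chosen here.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

# Hypothetical imbalanced data set: 2,000 negatives and 40 positives (about 2%).
n_neg, n_pos = 2000, 40
y_true = np.concatenate([np.zeros(n_neg, dtype=int), np.ones(n_pos, dtype=int)])

# Positives score higher on average, but the two score distributions overlap.
y_scores = np.concatenate([
    rng.normal(loc=0.0, scale=1.0, size=n_neg),
    rng.normal(loc=1.5, scale=1.0, size=n_pos),
])

roc_auc = roc_auc_score(y_true, y_scores)
pr_auc = average_precision_score(y_true, y_scores)  # a common PR-AUC estimate

print(f"ROC-AUC: {roc_auc:.3f}")
print(f"PR-AUC (average precision): {pr_auc:.3f}")
```

With so few positives, even a small false positive rate means many false alarms per true hit, so the PR-AUC figure should come out well below the ROC-AUC figure for the same scores.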

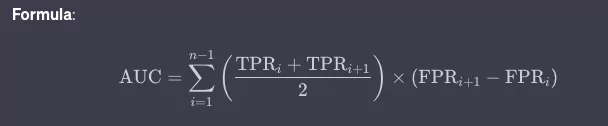

ROC-AUC is calculated by integrating the ROC curve, that is, by measuring the area beneath it. In practice the curve is a finite set of points, so the area is the sum of the trapezoids formed between consecutive points.

You can calculate ROC-AUC using Python with libraries such as scikit-learn.

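```python
from sklearn.metrics import roc_auc_score

# Ground-truth labels (1 = positive class) and the model's predicted scores
y_true = [0, 1, 1, 0, 1]
y_scores = [0.1, 0.9, 0.8, 0.2, 0.7]

# Every positive outranks every negative here, so the result is 1.0
auc = roc_auc_score(y_true, y_scores)
print("ROC-AUC:", auc)
```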
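The trapezoid summation described earlier can also be reproduced by hand. The sketch below takes the curve points from scikit-learn's `roc_curve`, sums the trapezoid areas with NumPy, and compares the result with `roc_auc_score`. The variable names `manual_auc` and `library_auc` are illustrative only.

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

y_true = [0, 1, 1, 0, 1]
y_scores = [0.1, 0.9, 0.8, 0.2, 0.7]

# Points of the ROC curve: false positive rate (x) and true positive rate (y)
fpr, tpr, _ = roc_curve(y_true, y_scores)

# Each trapezoid has width (x[i+1] - x[i]) and mean height (y[i] + y[i+1]) / 2
widths = np.diff(fpr)
mean_heights = (tpr[1:] + tpr[:-1]) / 2
manual_auc = float(np.sum(widths * mean_heights))

library_auc = roc_auc_score(y_true, y_scores)
print("Manual trapezoid AUC:", manual_auc)
print("roc_auc_score:", library_auc)
```

Both values should agree, since they measure the same area.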

ROC-AUC is mainly employed in the following scenarios:

ROC-AUC is often chosen over other metrics for its robustness and interpretability. The table below compares it with other relevant metrics:

| Metric | Description | Sensitivity to Imbalanced Classes | Interpretation Across Thresholds | Focus on Positive Class Only | Application Scenarios |
| --- | --- | --- | --- | --- | --- |
| ROC-AUC | Area under the ROC curve | Moderate | Yes | No | Binary classification, especially when class imbalance exists |
| Recall | True Positive Rate (TPR) | High | No | Yes | When the cost of false negatives is high |
| Precision | Positive Predictive Value (PPV) | Low | No | Yes | When the cost of false positives is high |
| Accuracy | (TP + TN) / (TP + TN + FP + FN) | Low | No | No | General classification, especially when classes are balanced |

Monitoring the ROC-AUC can help you detect changes in the model’s ability to distinguish between classes. A sudden drop or variation in this metric might indicate data drift or model degradation.
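As a rough illustration of such monitoring, the sketch below computes ROC-AUC over consecutive batches of labelled predictions and flags batches that fall below a baseline. The helper names `windowed_auc` and `flag_drops`, the window size, and the 0.05 tolerance are all hypothetical choices for this example, not part of any particular monitoring tool.

```python
from sklearn.metrics import roc_auc_score


def windowed_auc(y_true, y_scores, window_size):
    """Compute ROC-AUC over consecutive, non-overlapping windows."""
    aucs = []
    for start in range(0, len(y_true), window_size):
        labels = y_true[start:start + window_size]
        scores = y_scores[start:start + window_size]
        # ROC-AUC is undefined when a window contains only one class.
        if len(set(labels)) < 2:
            aucs.append(None)
            continue
        aucs.append(roc_auc_score(labels, scores))
    return aucs


def flag_drops(aucs, baseline, tolerance=0.05):
    """Return indices of windows whose AUC is more than `tolerance` below baseline."""
    return [
        i for i, auc in enumerate(aucs)
        if auc is not None and auc < baseline - tolerance
    ]


# Toy data: the first window is ranked perfectly, the second has degraded.
y_true = [0, 1, 0, 1, 0, 1, 0, 1]
y_scores = [0.1, 0.9, 0.2, 0.8, 0.6, 0.4, 0.3, 0.7]

aucs = windowed_auc(y_true, y_scores, window_size=4)
alerts = flag_drops(aucs, baseline=aucs[0])
print("AUC per window:", aucs)
print("Windows flagged:", alerts)
```

In production, the windows would typically be time-based (for example, daily batches), and the baseline would come from a validation set or a stable reference period rather than the first window.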

ROC-AUC is a powerful metric for binary classification problems, providing insight into a model's ability to separate the positive and negative classes. Monitoring it regularly helps keep models effective and surfaces potential issues early in production.